In this article, I will improve the rain atmosphere effect I built in the previous article. The effect works by postprocessing the GBuffer in the deferred rendering path, just before the lighting calculation, where it increases the smoothness and decreases the GI and albedo.

There were two main issues I had to tackle:

- High smoothness resulted in flickering when using the bloom post process.

- All parameters were hard-coded, so I needed to make them accessible through the material’s properties.

Bloom flickering

High smoothness in the GBuffer can create very bright points on the screen in certain lighting conditions, which makes the bloom postprocess shimmer and flicker. Bloom flicker can be fixed either by modifying the bloom effect itself or by modifying its inputs. I decided to limit the smoothness value in the GBuffer. So far I used hardware blending for the GBuffer modifications, which has limited capabilities and doesn’t allow me to cap the maximum value written to the target texture. This can be solved by copying the normal-smoothness buffer and sampling the copy to implement custom blending in the fragment shader. That way, the shader that applies the effect can keep the smoothness in a reasonable range.
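To make the limitation concrete, here is a minimal sketch of the arithmetic in plain C#, not Unity code. It assumes an additive hardware blend into a normalized target that saturates at 1.0, and it borrows the values 0.7 and 0.86 that I settle on later in this article. The names HardwareAdditiveBlend and ClampedSoftwareBlend are hypothetical.

```csharp
using System;

static class SmoothnessBlendSketch
{
    // Models a fixed-function additive blend into a normalized render target
    // (assumption): the result can only saturate at 1.0, a lower cap cannot be chosen.
    public static float HardwareAdditiveBlend(float destination, float source)
    {
        return Math.Clamp(destination + source, 0.0f, 1.0f);
    }

    // Models blending in the fragment shader: the old value is read from a copy,
    // so any cap can be applied before the result is written back.
    public static float ClampedSoftwareBlend(float destination, float source, float maxValue)
    {
        return Math.Min(maxValue, destination + source);
    }

    static void Main()
    {
        float[] surfaceSmoothness = { 0.1f, 0.5f, 0.9f };
        foreach (float smoothness in surfaceSmoothness)
        {
            float hardware = HardwareAdditiveBlend(smoothness, 0.7f);
            float software = ClampedSoftwareBlend(smoothness, 0.7f, 0.86f);
            Console.WriteLine($"{smoothness:F2} -> hardware {hardware:F2}, software {software:F2}");
        }
    }
}
```

With the hardware path, every surface that starts at 0.3 smoothness or more ends up at the maximum of 1.0, which is exactly the kind of input that makes bloom shimmer. The software path never exceeds the chosen cap.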

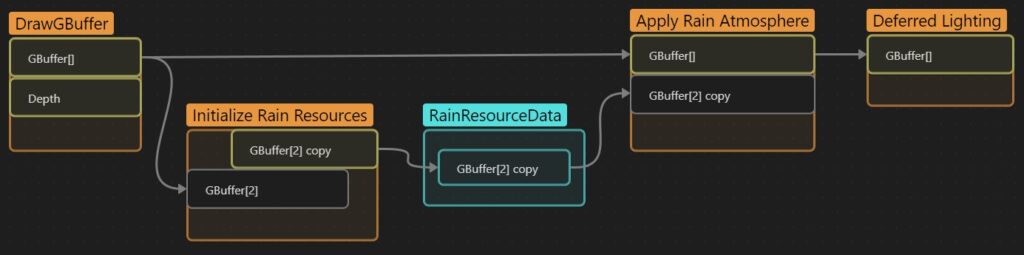

The current render graph looks like this (Apply Rain Atmosphere was implemented in the previous article).

I can add a custom node between DrawGBuffer and Apply Rain Atmosphere that will create a copy of the GBuffer[2] texture and share it with other passes. The pass responsible for that will be called Initialize Rain Resources. I will call the data shared between passes RainResourceData. Then, the Apply Rain Atmosphere pass will access the copy of GBuffer[2] and use it in the shader with custom blending.

My plan:

- Implement Initialize Rain Resources pass.

- Create a RainResourceData class to store resources shared between passes.

- Copy the GBuffer[2] contents into a custom texture using a custom shader.

- Access the copied GBuffer[2] in Apply Rain Atmosphere pass.

- Sample the copied GBuffer[2] in the fragment shader to implement custom blending and avoid bloom shimmer.

Implement resource initialization pass

My goal is to create a new texture in the Render Graph and copy the content of GBuffer[2] into this texture.

I created an InitializeRainResourcesPass and modified the RainAtmosphereFeature to execute it before the main pass. I’ve added a material I want to use with a custom shader to copy the GBuffer[2] contents.

public class RainAtmosphereFeature : ScriptableRendererFeature

{

public Material copyGBufferMaterial; // Added a material that will be used to copy GBuffer[2] contents.

public Material applyRainMaterial;

private bool HasValidResources => applyRainMaterial != null && copyGBufferMaterial != null; // Update validation

private InitializeRainResourcesPass initializeResourcesPass; // Added this pass to the render feature

private ApplyRainAtmospherePass applyRainAtmospherePass;

public override void Create()

{

applyRainAtmospherePass = new ApplyRainAtmospherePass();

initializeResourcesPass = new InitializeRainResourcesPass();

}

public override void AddRenderPasses(ScriptableRenderer renderer, ref RenderingData renderingData)

{

bool isGameOrSceneCamera = renderingData.cameraData.cameraType == CameraType.Game || renderingData.cameraData.cameraType == CameraType.SceneView;

if (isGameOrSceneCamera && HasValidResources)

{

initializeResourcesPass.material = copyGBufferMaterial; // Updated the material

applyRainAtmospherePass.material = applyRainMaterial;

renderer.EnqueuePass(initializeResourcesPass); // Enqueued as first pass

renderer.EnqueuePass(applyRainAtmospherePass);

}

}

}

// This pass will initialize the resources and copy the GBuffer.

public class InitializeRainResourcesPass : ScriptableRenderPass

{

public Material material { get; internal set; }

public InitializeRainResourcesPass()

{

this.renderPassEvent = RenderPassEvent.AfterRenderingGbuffer;

}

public override void RecordRenderGraph(RenderGraph renderGraph, ContextContainer frameData)

{

// TODO: Initialize shared resources

// TODO: Copy GBuffer[2] contents

}

}

Shared resources

I started by creating a class that stores the shared resources. Shared resources inherit from ContextItem and must release any manually allocated resources in the Reset() function. In this case, I only need to store a TextureHandle: the copy of GBuffer[2].

// Resources shared between passes need to inherit from ContextItem

public class RainResourceData : ContextItem

{

// Handle for Gbuffer[2] copy.

public TextureHandle normalSmoothnessTexture { get; internal set; }

// And reset all resources using this method.

public override void Reset()

{

// Use TextureHandle.nullHandle to reset texture handles.

normalSmoothnessTexture = TextureHandle.nullHandle;

}

}

I created the shared resources in the Initialize Rain Resources pass using the frameData.GetOrCreate<>() API. After this call, other passes can access those resources.

public override void RecordRenderGraph(RenderGraph renderGraph, ContextContainer frameData)

{

// Initialize the shared resources

RainResourceData rainResources = frameData.GetOrCreate<RainResourceData>();

...

Accessing the GBuffer

I got the reference to the GBuffer and validated it to avoid executing the pass in a forward rendering path.

public override void RecordRenderGraph(RenderGraph renderGraph, ContextContainer frameData)

{

RainResourceData rainResources = frameData.GetOrCreate<RainResourceData>();

// Access URP GBuffer

var urpResources = frameData.Get<UniversalResourceData>();

var gBuffer = urpResources.gBuffer;

// Check if the GBuffer exists.

if (gBuffer == null || gBuffer.Length == 0 || gBuffer[0].Equals(TextureHandle.nullHandle))

return;

Allocate GBuffer copy texture

I allocated the target texture using the renderGraph.CreateTexture(textureDescriptor) method. This method does not allocate the texture immediately. Instead, it lets the Render Graph manage the lifetime of the texture, and the Render Graph ensures that the texture exists when the render function executes.

...

if (gBuffer == null || gBuffer.Length == 0 || gBuffer[0].Equals(TextureHandle.nullHandle))

return;

// Allocate the texture handle in the render graph.

rainResources.normalSmoothnessTexture = renderGraph.CreateTexture(gBuffer[2].GetDescriptor(renderGraph));

Creating render pass

Then, I created a pass in the render graph. I used a raster render pass where I specified:

- Render attachment – our freshly allocated texture handle

- Dependencies – gBuffer[2] texture

- Render function

...

rainResources.normalSmoothnessTexture = renderGraph.CreateTexture(gBuffer[2].GetDescriptor(renderGraph));

// Create a render pass that will create a copy of GBuffer[2]

using (var builder = renderGraph.AddRasterRenderPass(GetType().Name, out PassData passData))

{

// Specify dependencies

builder.SetRenderAttachment(rainResources.normalSmoothnessTexture, 0);

builder.UseTexture(gBuffer[2]);

// Save data for render function

passData.gBuffer_2 = gBuffer[2];

passData.material = material;

// Set the render function

builder.SetRenderFunc((PassData passData, RasterGraphContext context) => RenderFunction(passData, context));

}

}

private static void RenderFunction(PassData passData, RasterGraphContext context)

{

// TODO: Implement the rendering here

}

internal class PassData

{

internal Material material; // Material used to copy the GBuffer

internal TextureHandle gBuffer_2; // Original GBuffer[2] handle

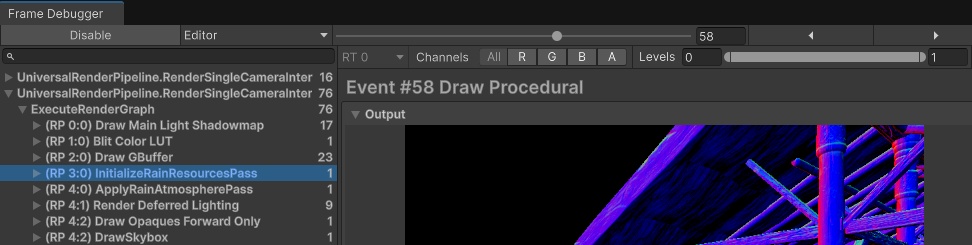

}Render function

Then, I implemented the render function. RasterGraphContext provides a pool of temporary MaterialPropertyBlocks, which I used to set the shader properties. I set the _GBuffer2 property to the original texture. The shader used by the material declares that property.

private static void RenderFunction(PassData passData, RasterGraphContext context)

{

// Get property block and set shader parameters.

MaterialPropertyBlock propertyBlock = context.renderGraphPool.GetTempMaterialPropertyBlock();

propertyBlock.SetTexture(Uniforms._GBuffer2, passData.gBuffer_2);

// Execute procedural fullscreen draw.

context.cmd.DrawProcedural(Matrix4x4.identity, passData.material, 0, MeshTopology.Triangles, 6, 1, propertyBlock);

}

// Utility class that I like to use to store shader properties.

public static class Uniforms

{

internal static readonly int _GBuffer2 = Shader.PropertyToID(nameof(_GBuffer2));

}

Writing shader

The RenderGraph node is ready, and it’s time to implement the shader that copies the contents of the GBuffer. The shader should read the contents of the GBuffer texture and then output those in the fragment shader. I used the fullscreen draw template to achieve that.

Shader "Hidden/CopyGBuffer2"

{

SubShader

{

Pass

{

// Disable triangle culling and depth testing

Cull Off

ZTest Off

ZWrite Off

HLSLPROGRAM

#pragma vertex vert

#pragma fragment frag

#include "Packages/com.unity.render-pipelines.universal/ShaderLibrary/Core.hlsl"

// Fullscreen quad in clip space

static const float4 verticesCS[] =

{

float4(-1.0, -1.0, 0.0, 1.0),

float4(-1.0, 1.0, 0.0, 1.0),

float4(1.0, 1.0, 0.0, 1.0),

float4(-1.0, -1.0, 0.0, 1.0),

float4(1.0, 1.0, 0.0, 1.0),

float4(1.0, -1.0, 0.0, 1.0)

};

void vert (uint vertexID : SV_VertexID, out float4 OUT_PositionCS : SV_Position)

{

// Output a fullscreen quad. The output parameter must be written.

OUT_PositionCS = verticesCS[vertexID];

}

void frag (out float4 OUT_Color : SV_Target0)

{

OUT_Color = float4(0.0, 0.0, 0.0, 0.0); // TODO: Output the GBuffer contents.

}

ENDHLSL

}

}

}

Vertex Shader

I modified the vertex shader to output a UV calculated from the clip space. Clip space range is from -1.0 to 1.0, and UV is from 0.0 to 1.0, so I needed to remap those values.

On some platforms, UV starts at the top of the screen. In this case, Y-axis needs to be flipped.
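Before the shader change itself, the remap is easy to check on the corners of the fullscreen quad. This is a minimal plain C# sketch of the same arithmetic, not shader code. The ClipToUv helper and its uvStartsAtTop flag are hypothetical names that stand in for the UNITY_UV_STARTS_AT_TOP branch.

```csharp
using System;

static class ClipToUvSketch
{
    // Remaps a clip-space coordinate pair from [-1, 1] to the UV range [0, 1].
    // When uvStartsAtTop is true, V is flipped, mirroring the
    // UNITY_UV_STARTS_AT_TOP branch in the shader.
    public static (float u, float v) ClipToUv(float x, float y, bool uvStartsAtTop)
    {
        float u = x * 0.5f + 0.5f;
        float v = y * 0.5f + 0.5f;
        if (uvStartsAtTop)
            v = 1.0f - v;
        return (u, v);
    }

    static void Main()
    {
        // Corners of the fullscreen quad used by the vertex shader.
        (float x, float y)[] corners = { (-1f, -1f), (-1f, 1f), (1f, 1f), (1f, -1f) };
        foreach (var (x, y) in corners)
        {
            var bottomUp = ClipToUv(x, y, false);
            var topDown = ClipToUv(x, y, true);
            Console.WriteLine($"clip ({x}, {y}) -> uv {bottomUp}, flipped uv {topDown}");
        }
    }
}
```

The corner at clip space (-1, -1) maps to UV (0, 0) without the flip and to UV (0, 1) with it, so only the vertical axis changes between platforms.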

// Add UV interpolator output to the vertex shader.

void vert (uint vertexID : SV_VertexID, out float2 OUT_uv : TEXCOORD0, out float4 OUT_PositionCS : SV_Position)

{

float4 positionCS = verticesCS[vertexID];

// Calculate screen UV from the clip space

float2 uv = positionCS.xy * 0.5 + 0.5;

#if UNITY_UV_STARTS_AT_TOP

uv.y = 1.0 - uv.y;

#endif

// Set position on the screen and UV

OUT_PositionCS = verticesCS[vertexID];

OUT_uv = uv;

}

Fragment Shader

I declared Texture2D _GBuffer2, the texture I assigned in the render function. I used the UV to sample the GBuffer and output its contents into the render attachment. I used the object-oriented HLSL syntax to sample the texture.

SamplerState pointClampSampler; // Point clamp sampler we will use to sample the GBuffer

Texture2D _GBuffer2; // GBuffer texture

void frag (in float2 IN_uv : TEXCOORD0, out float4 OUT_Color : SV_Target0)

{

// Use screen UV to sample and output the GBuffer contents

OUT_Color = _GBuffer2.SampleLevel(pointClampSampler, IN_uv, 0.0f);

}

Now I could create a material with this shader and assign it to the renderer feature.

Access the copied GBuffer in ApplyRainAtmospherePass

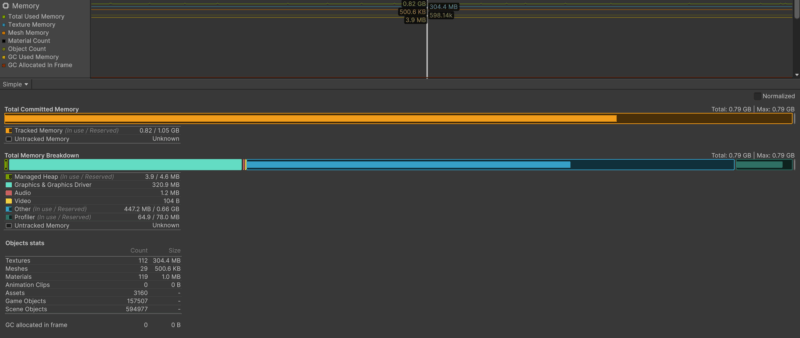

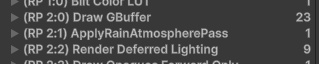

After I assigned the material to the renderer feature, the InitializeRainResourcesPass did not show up in the Frame Debugger or the Render Graph Viewer. It didn’t execute at all.

Render Graph detects that I don’t use the created texture in other passes, so it culls the render node created in the InitializeRainResourcesPass. To fix that, I must declare the texture usage in the ApplyRainAtmospherePass. I modified the ApplyRainAtmospherePass by accessing shared resources with the frameData.Get<>() API and declared the texture usage.
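The culling rule is easier to see in a toy model. The sketch below is plain C# and is not how Unity implements the Render Graph. It assumes a simplified rule where a pass survives only if it writes a resource that the frame output or an already kept pass still needs. The Pass type, the resource names, and the SurvivingPasses helper are all hypothetical.

```csharp
using System;
using System.Collections.Generic;

static class PassCullingSketch
{
    // A pass is described only by the resources it reads and writes.
    public sealed class Pass
    {
        public string Name;
        public string[] Reads;
        public string[] Writes;

        public Pass(string name, string[] reads, string[] writes)
        {
            Name = name;
            Reads = reads;
            Writes = writes;
        }
    }

    // Walks the passes from last to first and keeps a pass only when it writes
    // a resource that the frame output or an already kept pass still needs.
    public static List<string> SurvivingPasses(IReadOnlyList<Pass> passes, IEnumerable<string> frameOutputs)
    {
        var needed = new HashSet<string>(frameOutputs);
        var kept = new List<string>();

        for (int i = passes.Count - 1; i >= 0; i--)
        {
            Pass pass = passes[i];
            bool writesNeededResource = Array.Exists(pass.Writes, needed.Contains);
            if (!writesNeededResource)
                continue; // Nobody consumes the output, so the pass is culled.

            needed.UnionWith(pass.Reads);
            kept.Insert(0, pass.Name);
        }

        return kept;
    }

    // Builds the three passes from this article. applyReadsCopy toggles
    // whether the apply pass declares that it reads the copied texture.
    public static List<string> RunExample(bool applyReadsCopy)
    {
        var passes = new List<Pass>
        {
            new Pass("DrawGBuffer", new string[0], new[] { "GBuffer" }),
            new Pass("InitializeRainResources", new[] { "GBuffer" }, new[] { "NormalSmoothnessCopy" }),
            new Pass("ApplyRainAtmosphere",
                applyReadsCopy ? new[] { "NormalSmoothnessCopy" } : new string[0],
                new[] { "GBuffer" }),
        };
        return SurvivingPasses(passes, new[] { "GBuffer" });
    }

    static void Main()
    {
        Console.WriteLine(string.Join(", ", RunExample(false)));
        Console.WriteLine(string.Join(", ", RunExample(true)));
    }
}
```

In this model, the initialization pass disappears when nothing declares a read of its output and comes back as soon as the apply pass declares one, which matches the behavior I observed in the Render Graph Viewer.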

public override void RecordRenderGraph(RenderGraph renderGraph, ContextContainer frameData)

{

...

// Get the rain resources

RainResourceData rainResources = frameData.Get<RainResourceData>();

using (var builder = renderGraph.AddRasterRenderPass(GetType().Name, out PassData passData))

{

// Declare the texture usage

builder.UseTexture(rainResources.normalSmoothnessTexture);

...

}

}

After this change, the Frame Debugger and Render Graph Viewer correctly display the InitializeRainResourcesPass.

Now that I know the Render Graph executes the initialization pass, I can use the copy of the GBuffer in the ApplyRainAtmospherePass. I forwarded the texture handle to the passData used in the render function.

...

builder.UseTexture(rainResources.normalSmoothnessTexture);

passData.gBuffer2 = rainResources.normalSmoothnessTexture; // Pass the texture to the render function

passData.material = material;

...

internal class PassData

{

internal Material material;

internal TextureHandle gBuffer2; // Added the texture handle to the data used in the render function

}

I used a property block from the render graph pool to set the texture in the shader.

private static void RenderFunction(PassData passData, RasterGraphContext context)

{

// Get property block from the pool and assign the texture.

MaterialPropertyBlock propertyBlock = context.renderGraphPool.GetTempMaterialPropertyBlock();

propertyBlock.SetTexture(Uniforms._GBuffer2, passData.gBuffer2);

context.cmd.DrawProcedural(Matrix4x4.identity, passData.material, 0, MeshTopology.Triangles, 6, 1, propertyBlock);

}

All the data is set now, so it’s time to adjust the shader.

Adjusting the rain atmosphere shader

My goal was to reduce the shimmering in the bloom postprocess. I could do that by implementing custom blending for the smoothness stored in the alpha channel of GBuffer[2]. First of all, I needed to declare the texture in the shader.

SamplerState pointClampSampler; // Point clamp sampler we will use to sample the GBuffer

Texture2D _GBuffer2; // GBuffer texture

Then, I needed a UV to sample the texture. I copied the vertex shader from the CopyGBuffer.shader.

void vert (uint vertexID : SV_VertexID, out float2 OUT_uv : TEXCOORD0, out float4 OUT_PositionCS : SV_Position)

{

float4 positionCS = verticesCS[vertexID];

float2 uv = positionCS.xy * 0.5 + 0.5;

#if UNITY_UV_STARTS_AT_TOP

uv.y = 1.0 - uv.y;

#endif

OUT_PositionCS = verticesCS[vertexID];

OUT_uv = uv;

}

It is good practice to turn off hardware blending for a target when switching it to software blending, as it can save some GPU resources.

Blend 2 Off // Disable hardware blending for render target 2 (GBuffer[2]).

The last step was to sample the GBuffer contents in the fragment shader and write the custom blending. I’ve found that additive blending for smoothness, clamped to a maximum of 0.86, works best in my case, so I hardcoded those values. This is the final fragment shader code:

void frag

(

in float2 IN_uv : TEXCOORD0, // Getting UV from the vertex shader

out float4 OUT_GBuffer0 : SV_Target0,

out float4 OUT_GBuffer2 : SV_Target2,

out float4 OUT_GBuffer3 : SV_Target3

)

{

// Access GBuffer[2] value

float4 rawGBuffer2 = _GBuffer2.SampleLevel(pointClampSampler, IN_uv, 0.0);

// Increase smoothness and clamp the value

rawGBuffer2.a = min(0.86, rawGBuffer2.a + 0.7);

OUT_GBuffer0 = float4(0.5, 0.5, 0.5, 1.0);

OUT_GBuffer2 = rawGBuffer2; // Write it back into GBuffer

OUT_GBuffer3 = float4(0.5, 0.5, 0.5, 1.0);

}

I could now test the results. As you can see, I successfully reduced most of the flickering.

You probably noticed that I needed to write much more code just to copy a texture. In the previous API I would use a CommandBuffer.Blit function to achieve that. Here I needed to explicitly create a separate pass. There are some utility methods to blit textures in Render Graph, but they still require creating a separate pass, custom shader, and managing the dependencies between passes – no way around that.

Shader Parameters

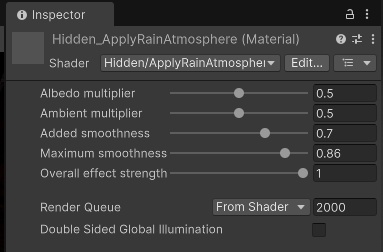

Because I hardcoded all values in the shader, I can’t adjust them outside the code. I will add parameters to the material to make the visuals easier to adjust:

- Albedo multiplier

- Ambient light multiplier

- Smoothness

- Max Smoothness

- Effect strength – controls the strength of the effect. 0 – no effect is applied, 1 – full effect is applied.

Let’s start by adding shader properties. I added this at the beginning of the shader.

Shader "Hidden/ApplyRainAtmosphere"

{

Properties

{

_AlbedoMultiplier("Albedo multiplier", Range(0.0, 1.0)) = 0.5

_AmbientLightMultiplier("Ambient multiplier", Range(0.0, 1.0)) = 0.5

_SmoothnessAdd("Added smoothness", Range(0.0, 1.0)) = 0.7

_SmoothnessMax("Maximum smoothness", Range(0.0, 1.0)) = 0.86

_EffectStrength("Overall effect strength", Range(0.0, 1.0)) = 1.0

}

...

Then, I declared all those properties in the shader code, just above the fragment shader.

...

float _AlbedoMultiplier;

float _AmbientLightMultiplier;

float _SmoothnessAdd;

float _SmoothnessMax;

float _EffectStrength;

void frag

(

in float2 IN_uv : TEXCOORD0,

...

Next, I replaced the hardcoded values with the new parameters, leaving _EffectStrength for the next step.

...

rawGBuffer2.a = min(_SmoothnessMax, rawGBuffer2.a + _SmoothnessAdd);

OUT_GBuffer0 = float4((float3)_AlbedoMultiplier, 1.0);

OUT_GBuffer2 = rawGBuffer2; // Write it back into GBuffer

OUT_GBuffer3 = float4((float3)_AmbientLightMultiplier, 1.0);

...

To implement the _EffectStrength parameter, the blending in the shader needs to do nothing when the value is 0. I implemented that using the lerp function, which interpolates all the values toward neutral ones. If the effect strength is set to 0, the shader must output 1 for the multiply-blended targets and leave the smoothness as is.

...

// Lerp from original smoothness value to the custom one

rawGBuffer2.a = lerp(rawGBuffer2.a, min(_SmoothnessMax, rawGBuffer2.a + _SmoothnessAdd), _EffectStrength);

// Lerp from 1 to custom values in multiply-blended targets

OUT_GBuffer0 = float4((float3)lerp(1.0, _AlbedoMultiplier, _EffectStrength), 1.0);

OUT_GBuffer2 = rawGBuffer2;

OUT_GBuffer3 = float4((float3)lerp(1.0, _AmbientLightMultiplier, _EffectStrength), 1.0);

...

Result

All parameters work nicely.
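As a quick numeric check of the _EffectStrength blend, here is a minimal plain C# sketch of the same math. It is not the shader itself. The helper names are hypothetical, and the inputs use the default property values from the material (0.5, 0.7, 0.86) together with an assumed surface smoothness of 0.3.

```csharp
using System;

static class EffectStrengthSketch
{
    // Same definition as the HLSL lerp intrinsic: a + (b - a) * t.
    public static float Lerp(float a, float b, float t) => a + (b - a) * t;

    // Multiplier written to a multiply-blended target (albedo or ambient light).
    public static float BlendedMultiplier(float multiplier, float strength)
        => Lerp(1.0f, multiplier, strength);

    // Smoothness written back to GBuffer[2].
    public static float BlendedSmoothness(float original, float add, float max, float strength)
        => Lerp(original, Math.Min(max, original + add), strength);

    static void Main()
    {
        foreach (float strength in new[] { 0.0f, 0.5f, 1.0f })
        {
            float multiplier = BlendedMultiplier(0.5f, strength);
            float smoothness = BlendedSmoothness(0.3f, 0.7f, 0.86f, strength);
            Console.WriteLine($"strength {strength:F1}: multiplier {multiplier:F2}, smoothness {smoothness:F2}");
        }
    }
}
```

At strength 0 the multiplier is exactly 1 and the smoothness is untouched, so the pass leaves the GBuffer as it was. At strength 1 the values match the version without the lerp.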

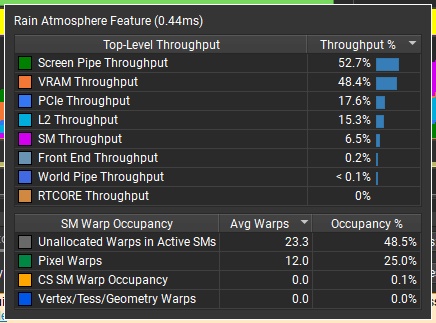

My rain atmosphere effect is ready. It takes only 0.44 ms to render at 1440p on an RTX 3060, increasing the frame time from 7.77 ms to 8.21 ms (a 5.7% increase), measured with NVIDIA Nsight Graphics. The shader is mostly screen pipe bound, which means that most of the time is spent blending the GBuffer colors in the hardware blending units, so I can’t get much faster than that!

About the author

Jan Mróz / Sir Johny – Graphics Programmer at The Knights of U. He specializes in rendering pipeline extensions and performance optimization across CPU, GPU, and memory. Sir Johny also leads tech-art initiatives that improve visual quality while cutting iteration time.

Learn more from Jan at proceduralpixels.com.